Ubuntu 24.04 with ollama and webui

August 5th, 2024 7:30 PM Mr. Q Categories: Large Language Models (LLM)

Ollama and Open WebUI are tools for running large language models on your own hardware. Ollama downloads and serves open-weight models (such as Llama, Mistral, and Gemma, listed at https://ollama.com/library). It lets you customize them for specific tasks or industries, for example with a custom system prompt or generation parameters. Open WebUI provides an accessible, user-friendly, browser-based chat interface on top of Ollama. Together they let users of varying technical levels work with LLMs, customize models for their needs, and deploy them on a wide range of machines, ideally ones with a compatible GPU.

One of the key benefits of this setup is privacy. Because the models run on your own machine, prompts and responses are not sent to a third-party service, so sensitive or confidential information stays on hardware you control. This makes Open WebUI particularly valuable for organizations handling internal communications and data, and for any application where privacy and security are paramount.

Beyond privacy, Open WebUI can be extended with additional functionality and connected to other APIs (for example, OpenAI-compatible endpoints), making it a versatile tool for developers working on various projects. Ollama's ability to customize models for specific use cases and domains further helps tailor the generated content to unique requirements.

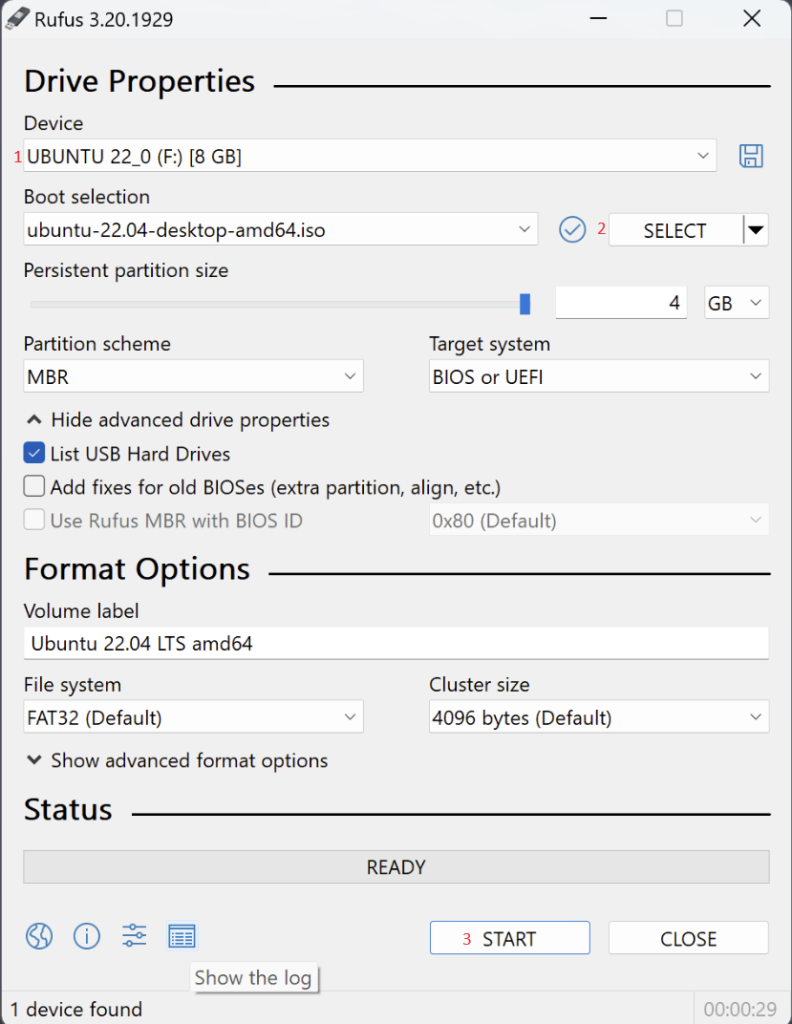

USB Image setup

Download ISO: ubuntu-24.04-desktop-amd64.iso
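Before writing the image, it is worth confirming the download is intact. Below is a minimal sketch. It assumes a Linux or WSL shell with GNU `sha256sum`, and it takes the expected value from the `SHA256SUMS` file Ubuntu publishes alongside the ISO. `verify_sha256` is a hypothetical helper name, not part of any Ubuntu tool.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-256 against an expected hex digest.
# Assumes GNU coreutils' sha256sum (Linux or WSL). verify_sha256 is a
# hypothetical helper name, not an Ubuntu tool.
verify_sha256() {
  local file="$1" expected="$2" actual
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  [ -n "$expected" ] || { echo "no expected hash given" >&2; return 1; }
  # sha256sum prints "<hash>  <file>"; keep only the hash.
  actual="$(sha256sum "$file" | awk '{print $1}')"
  # Compare case-insensitively in case the expected hash was pasted in upper case.
  [ "${actual,,}" = "${expected,,}" ]
}
```

For example, `verify_sha256 ubuntu-24.04-desktop-amd64.iso <hash from SHA256SUMS> && echo OK`. With `SHA256SUMS` in the same directory, `sha256sum -c --ignore-missing SHA256SUMS` does the same check in one step. On Windows, `certutil -hashfile ubuntu-24.04-desktop-amd64.iso SHA256` prints the hash to compare by hand.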

Download and install Rufus: https://rufus.ie/en/

- Device: select the USB drive

- Boot selection: select the downloaded ISO to be written to the USB drive

- Leave the persistent partition size at its default (the value shown in the screenshot is wrong)

- Click START to write the image to the USB drive

OS installation

Insert the USB drive into a computer with an NVIDIA GPU (the more VRAM and the faster the card, the better).

Make sure your BIOS/UEFI is set to boot from USB first.

Power on and follow the instructions provided by the Ubuntu installer.

OS Update

Launch a Terminal

$ sudo apt update && sudo apt upgrade

Install SSH (skip if not needed)

$ sudo apt install openssh-server -y

$ sudo systemctl enable ssh

Install ollama and webui

Let's get snap (Ubuntu 24.04 already ships with it, so this usually just confirms it is installed):

$ sudo apt install snapd

Install ollama

$ sudo snap install ollama

Install webui

$ sudo snap install open-webui --beta

You should now be able to visit the site at http://localhost:8080 if installed locally, or at http://xxx.xxx.xxx.xxx:8080 (your server's IP address) for a remote server.
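To confirm both services answer over HTTP without opening a browser, here is a minimal sketch. It assumes Ollama's default port 11434 (its `/api/version` path appears in the logs in the next section) and Open WebUI on port 8080 as installed above. `check_endpoint` and `check_all` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: check that the Ollama API and the Open WebUI page respond.
# Assumes Ollama on its default port 11434 and Open WebUI on 8080.
check_endpoint() {
  local name="$1" url="$2"
  # -f: treat HTTP errors (>= 400) as failures; --max-time bounds the wait.
  if curl -fsS --max-time 5 -o /dev/null "$url"; then
    echo "OK   $name ($url)"
  else
    echo "FAIL $name ($url)" >&2
    return 1
  fi
}

check_all() {
  local rc=0
  check_endpoint "ollama"     "http://localhost:11434/api/version" || rc=1
  check_endpoint "open-webui" "http://localhost:8080/"             || rc=1
  return "$rc"
}
```

Run `check_all` on the server itself. Ollama's default is to listen only on 127.0.0.1, so from another machine only port 8080 is expected to answer.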

Validate services

Verify ollama is listening:

$ sudo systemctl status snap.ollama.listener.service

● snap.ollama.listener.service - Service for snap application ollama.listener

Loaded: loaded (/etc/systemd/system/snap.ollama.listener.service; enabled; preset: enabled)

Active: active (running) since Mon 2024-08-05 21:59:30 EDT; 11h ago

Main PID: 1368 (snap_launcher.s)

Tasks: 16 (limit: 38362)

Memory: 27.8G (peak: 28.8G)

CPU: 44min 27.800s

CGroup: /system.slice/snap.ollama.listener.service

├─1368 /bin/bash /snap/ollama/15/bin/snap_launcher.sh serve

└─1484 /snap/ollama/15/bin/ollama serve

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"update_slots","level":"INFO","line":1640,"msg":"slot released","n_cache_tokens":1063,"n_ctx":2048,"n_past":1062,"n_system_tokens":>

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"log_server_request","level":"INFO","line":2734,"method":"POST","msg":"request","params":{},"path":"/completion","remote_addr":"127>

Aug 06 09:30:46 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:30:46 | 200 | 26.403213174s | 127.0.0.1 | POST "/api/chat"

Aug 06 09:32:46 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:32:46 | 200 | 82.836µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:34:04 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:34:04 | 200 | 4.075156ms | 127.0.0.1 | GET "/api/tags"

Aug 06 09:35:19 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:35:19 | 200 | 102.505µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:37:34 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:37:34 | 200 | 119.431µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:37:35 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:37:35 | 200 | 62.595µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:38:04 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:38:04 | 200 | 65.614µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:38:15 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:38:15 | 200 | 63.252µs | 127.0.0.1 | GET "/api/version"


Verify webui is listening:

$ sudo systemctl status snap.open-webui.listener.service

● snap.open-webui.listener.service - Service for snap application open-webui.listener

Loaded: loaded (/etc/systemd/system/snap.open-webui.listener.service; enabled; preset: enabled)

Active: active (running) since Mon 2024-08-05 21:59:30 EDT; 11h ago

Main PID: 1369 (run-snap.sh)

Tasks: 25 (limit: 38362)

Memory: 970.1M (peak: 1.0G)

CPU: 1min 19.133s

CGroup: /system.slice/snap.open-webui.listener.service

├─1369 /bin/bash /snap/open-webui/7/bin/run-snap.sh

└─1505 python3 /snap/open-webui/7/bin/uvicorn main:app --host 0.0.0.0 --port 8080 --forwarded-allow-ips "*"

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2 HTTP/1.1" 200 OK

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2/tags HTTP/1.1" 200 OK

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:37:59 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2/tags HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "DELETE /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2 HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "GET /api/v1/chats/ HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:38:06 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /api/v1/chats/4017b5a0-a8af-426f-b3d3-4647723ae6d3 HTTP/1.1" 200 OK

Aug 06 09:38:06 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /api/v1/chats/4017b5a0-a8af-426f-b3d3-4647723ae6d3/tags HTTP/1.1" 200 OK

Aug 06 09:38:15 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51563 - "GET /ollama/api/version HTTP/1.1" 200 OK
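For a quicker pass/fail check than paging through `systemctl status`, here is a small sketch using `systemctl is-active --quiet`. That command exits successfully only when the unit is active. The unit names are the two checked above; `check_services` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: report whether each snap service unit is active.
# Unit names are the ones shown by `systemctl status` above.
SERVICES=(
  snap.ollama.listener.service
  snap.open-webui.listener.service
)

check_services() {
  local unit rc=0
  for unit in "$@"; do
    # is-active --quiet prints nothing; the exit status carries the answer.
    if systemctl is-active --quiet "$unit"; then
      echo "active    $unit"
    else
      echo "inactive  $unit" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_services "${SERVICES[@]}"`. If a unit reports inactive, `sudo snap restart ollama` or `sudo snap restart open-webui` restarts the corresponding snap.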

View logs

$ journalctl -e -u snap.ollama.listener.service

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"print_timings","level":"INFO","line":269,"msg":"prompt eval time = 7062.49 ms / 2019 tokens ( 3.50 ms per token, 285.>

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"print_timings","level":"INFO","line":283,"msg":"generation eval time = 4985.01 ms / 67 runs ( 74.40 ms per token, 13.>

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"print_timings","level":"INFO","line":293,"msg":" total time = 12047.50 ms","slot_id":0,"t_prompt_processing":7062.495,">

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"update_slots","level":"INFO","line":1640,"msg":"slot released","n_cache_tokens":1063,"n_ctx":2048,"n_past":1062,"n_system_tokens":>

Aug 06 09:30:46 ai-chat ollama.listener[131449]: {"function":"log_server_request","level":"INFO","line":2734,"method":"POST","msg":"request","params":{},"path":"/completion","remote_addr":"127>

Aug 06 09:30:46 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:30:46 | 200 | 26.403213174s | 127.0.0.1 | POST "/api/chat"

Aug 06 09:32:46 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:32:46 | 200 | 82.836µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:34:04 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:34:04 | 200 | 4.075156ms | 127.0.0.1 | GET "/api/tags"

Aug 06 09:35:19 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:35:19 | 200 | 102.505µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:37:34 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:37:34 | 200 | 119.431µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:37:35 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:37:35 | 200 | 62.595µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:38:04 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:38:04 | 200 | 65.614µs | 127.0.0.1 | GET "/api/version"

Aug 06 09:38:15 ai-chat ollama.listener[1484]: [GIN] 2024/08/06 - 09:38:15 | 200 | 63.252µs | 127.0.0.1 | GET "/api/version"

$ journalctl -e -u snap.open-webui.listener.service

Aug 06 09:35:19 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51491 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:35:19 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51491 - "GET /api/version/updates HTTP/1.1" 200 OK

Aug 06 09:35:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51500 - "GET /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5 HTTP/1.1" 200 OK

Aug 06 09:35:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51500 - "GET /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5/tags HTTP/1.1" 200 OK

Aug 06 09:37:23 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2 HTTP/1.1" 200 OK

Aug 06 09:37:24 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2/tags HTTP/1.1" 200 OK

Aug 06 09:37:25 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5 HTTP/1.1" 200 OK

Aug 06 09:37:25 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5/tags HTTP/1.1" 200 OK

Aug 06 09:37:29 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5/tags HTTP/1.1" 200 OK

Aug 06 09:37:34 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "DELETE /api/v1/chats/03892c3d-f75a-4507-9342-d4a6e4abe9b5 HTTP/1.1" 200 OK

Aug 06 09:37:34 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51542 - "GET /api/v1/chats/ HTTP/1.1" 200 OK

Aug 06 09:37:34 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2 HTTP/1.1" 200 OK

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2/tags HTTP/1.1" 200 OK

Aug 06 09:37:35 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51544 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:37:59 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "GET /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2/tags HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "DELETE /api/v1/chats/1e5f79e4-8fcd-47f3-8331-7fe18aa6e1f2 HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51556 - "GET /api/v1/chats/ HTTP/1.1" 200 OK

Aug 06 09:38:04 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /ollama/api/version HTTP/1.1" 200 OK

Aug 06 09:38:06 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /api/v1/chats/4017b5a0-a8af-426f-b3d3-4647723ae6d3 HTTP/1.1" 200 OK

Aug 06 09:38:06 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51558 - "GET /api/v1/chats/4017b5a0-a8af-426f-b3d3-4647723ae6d3/tags HTTP/1.1" 200 OK

Aug 06 09:38:15 ai-chat open-webui.listener[1505]: INFO: xxx.xxx.xxx.xxx:51563 - "GET /ollama/api/version HTTP/1.1" 200 OK
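The Ollama journal mixes the runner's JSON lines with GIN access-log lines. The function below counts GIN requests per method and path, which is a quick way to see which API endpoints are being hit. It is a sketch that assumes the `[GIN] date - time | status | latency | client | METHOD "path"` layout shown in the Ollama logs above. `summarize_gin` is a hypothetical helper, not part of Ollama.

```shell
#!/usr/bin/env bash
# Sketch: count Ollama HTTP requests per method and path from GIN log lines
# read on stdin. Assumes the pipe-separated GIN layout seen in the journal.
summarize_gin() {
  grep -F '[GIN]' \
    | awk -F'|' '{
        req = $NF                   # last field, e.g.  POST "/api/chat"
        gsub(/"/, "", req)          # drop the quotes around the path
        n = split(req, parts, " ")  # parts[1] = method, parts[n] = path
        if (n >= 2) count[parts[1] " " parts[n]]++
      }
      END { for (k in count) print count[k], k }' \
    | sort -k1,1nr -k2              # most frequent first, then by name
}
```

Usage: `journalctl -u snap.ollama.listener.service --no-pager | summarize_gin`. To watch new entries as they arrive instead, use `journalctl -f -u snap.ollama.listener.service`.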

Firewall Ports (Skip if not enabled)

Port that needs to be opened: 8080 (TCP)

Please use the Firewall Rules page to complete this step.

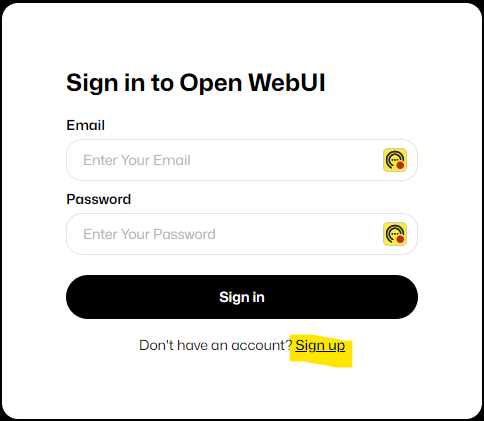

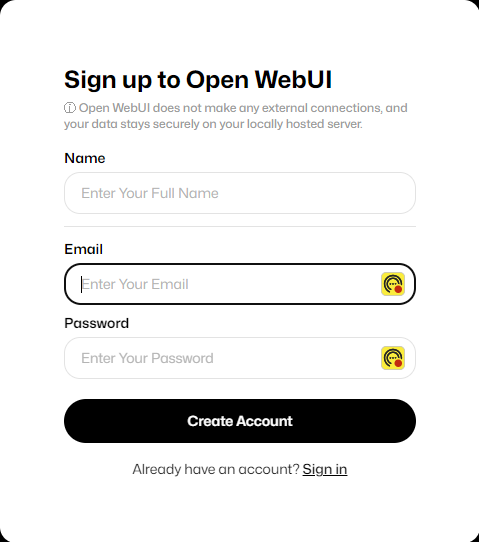
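If the firewall in question is ufw (Ubuntu's default front end, which stays inactive until you enable it), here is a minimal sketch for opening the port from the command line. `open_webui_port` and `is_valid_port` are hypothetical helper names. Adapt the sketch if you manage iptables or nftables directly.

```shell
#!/usr/bin/env bash
# Sketch: open the Open WebUI port with ufw. Assumes ufw is the firewall in use.
WEBUI_PORT=8080

# Accept only 1-5 digit numbers in the range 1-65535.
is_valid_port() {
  [[ "$1" =~ ^[0-9]{1,5}$ ]] && (( 10#$1 >= 1 && 10#$1 <= 65535 ))
}

open_webui_port() {
  local port="${1:-$WEBUI_PORT}"
  is_valid_port "$port" || { echo "invalid port: $port" >&2; return 1; }
  sudo ufw allow "${port}/tcp"   # allow inbound TCP on the port
  sudo ufw status verbose        # confirm the new rule is listed
}
```

To allow only your local network instead of every host, ufw accepts rules such as `sudo ufw allow from 192.168.1.0/24 to any port 8080 proto tcp` (the subnet is an example; substitute your own).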

First login

To log in the first time, click Sign up.

The first user created will be the admin.

You will be returned to the sign-in page; sign in with the account you just created.

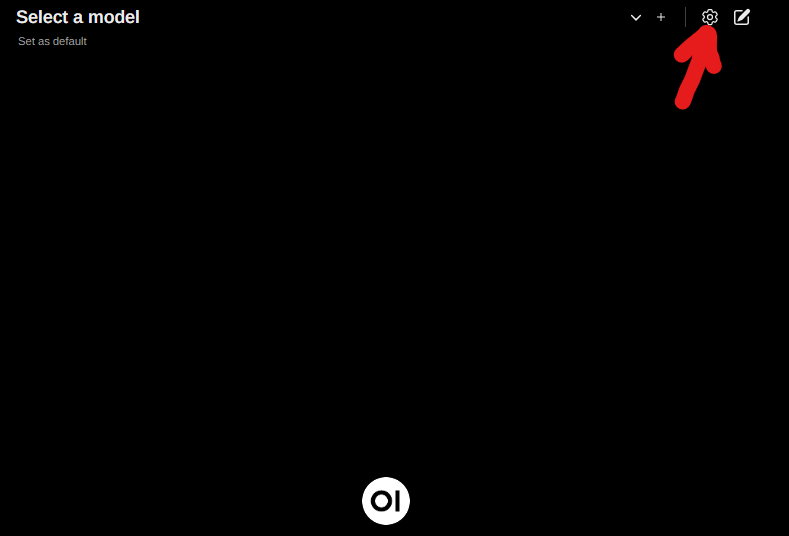

Install Models

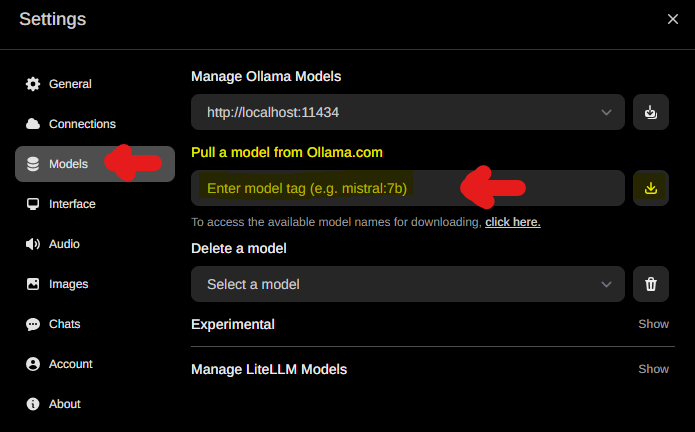

Excellent, things are working; let's do some configuration. Select the cog (settings) icon at the top of the screen:

- Select Models on the left side

- Type in the name of the model to download

- Available models: https://ollama.com/library

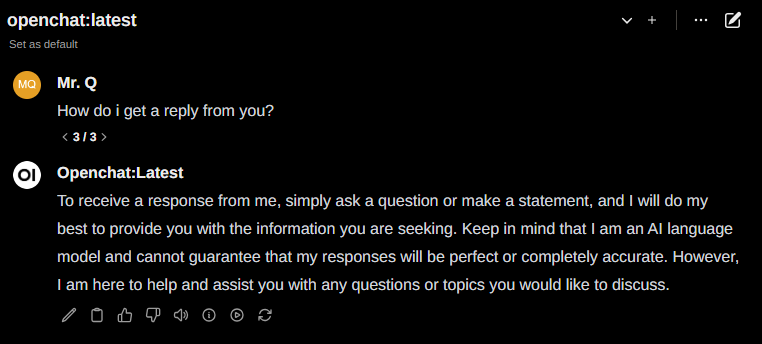
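Models can also be managed from a terminal on the server. This sketch lists installed models through `/api/tags`, the endpoint Open WebUI queries in the Ollama logs above. It assumes Ollama is on its default port 11434; `model_names` and `list_models` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: list the models Ollama has installed, via the /api/tags endpoint.
# Assumes Ollama on its default port; override OLLAMA_URL if yours differs.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Print one model name per line from an /api/tags JSON response on stdin.
# Assumes compact JSON with "name":"..." fields, as Ollama returns them.
model_names() {
  grep -o '"name":"[^"]*"' | cut -d'"' -f4
}

list_models() {
  curl -fsS --max-time 10 "${OLLAMA_URL}/api/tags" | model_names
}
```

For example, assuming the snap exposes the `ollama` command, `ollama pull llama3` downloads a model (`llama3` is only an example name). `list_models` or `ollama list` then shows what is installed, and models pulled this way also appear in Open WebUI's model list.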

First chat

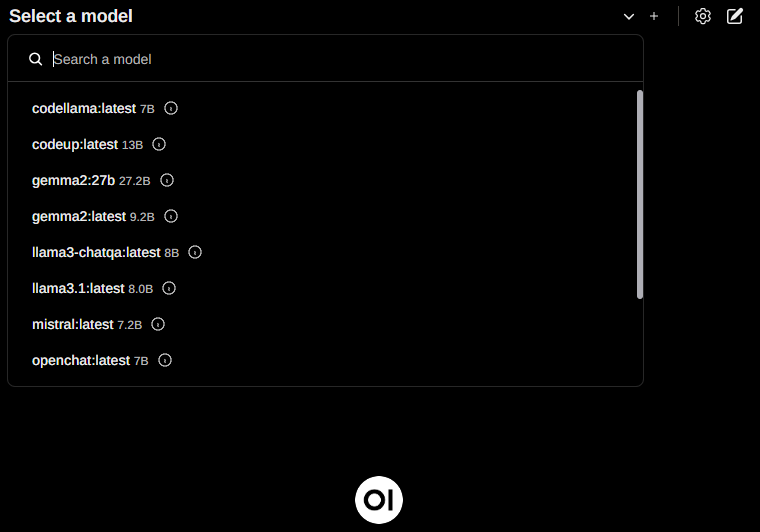

Even after you have downloaded some models, no model is selected for a new chat. At the top of the screen, click the downward caret to see the available models and select one.

Now you can have a conversation.
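Open WebUI talks to Ollama through `POST /api/chat` (visible in the Ollama logs above), and you can call the same endpoint directly to test a model without the web interface. This is a sketch assuming Ollama's default port 11434. `llama3` is an example model name and `ask_ollama` a hypothetical helper. The prompt is fixed to keep JSON quoting simple in plain shell.

```shell
#!/usr/bin/env bash
# Sketch: send one non-streaming chat message straight to Ollama's /api/chat.
# Assumes Ollama on its default port; "llama3" is only an example model name.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

ask_ollama() {
  local model="${1:-llama3}"
  # Fixed prompt: avoids having to JSON-escape arbitrary user input in shell.
  curl -fsS --max-time 300 "${OLLAMA_URL}/api/chat" \
    -H 'Content-Type: application/json' \
    -d "{\"model\": \"${model}\", \"stream\": false,
         \"messages\": [{\"role\": \"user\", \"content\": \"Why is the sky blue?\"}]}"
}
```

Usage: `ask_ollama llama3`. With `"stream": false`, the reply comes back as a single JSON object whose `message.content` field holds the model's answer.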

Move my chat data

Export ChatGPT

Import ChatGPT


Issue: NVIDIA card not found
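When Ollama does not use the NVIDIA card, one thing to rule out first is the driver itself. This minimal diagnostic sketch assumes `lspci` (from pciutils) and Ubuntu's `ubuntu-drivers` tool are available. `check_nvidia` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: check whether an NVIDIA GPU is present and visible to the driver.
check_nvidia() {
  # Is there an NVIDIA device on the PCI bus at all?
  if command -v lspci >/dev/null 2>&1 && ! lspci | grep -qi nvidia; then
    echo "No NVIDIA device found on the PCI bus." >&2
    return 1
  fi
  # Is the NVIDIA driver (which provides nvidia-smi) installed?
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: the NVIDIA driver is probably not installed." >&2
    echo "Try: sudo ubuntu-drivers install, then reboot." >&2
    return 1
  fi
  # List each GPU the driver can see, with its total memory.
  nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
}
```

If the driver was missing, install it with `sudo ubuntu-drivers install` and reboot. Then run `check_nvidia` again and restart Ollama with `sudo snap restart ollama` so it re-detects the GPU.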

References:

https://snapcraft.io/install/ollama/ubuntu

https://snapcraft.io/install/open-webui/ubuntu

https://stackoverflow.com/questions/30251889/how-can-i-open-some-ports-on-ubuntu

https://ollama.com/download/linux

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

https://github.com/open-webui/open-webui

Things to Try:

Images: https://modelfusion.dev/integration/model-provider/automatic1111